January 22, 2026

You put a harness on a horse to steer it to where you want to go, like how AI harnesses guide a coding agent to the end objective of writing working code.

Everyone's talking about guardrails today. Claude Code has settings.json to restrict file access. NVIDIA has their NeMo Guardrails for enterprise. We ourselves created Fence as a container-free sandbox for terminal agents.

Guardrails are table stakes now. They're also not enough.

In the physical world, blinders are things you put on a horse to keep it from spooking. They restrict what it can see, which makes a horse with blinders on calmer. But a calmer horse doesn’t mean it’s going to go the right direction.

In this analogy, guardrails are blinders. They stop your agent from doing bad things, but they don’t necessarily help your agent do correct things. A harness is different. You put a harness on a horse to steer it to where you want to go, like how AI harnesses guide a coding agent to the end objective of writing working code.

There’s a meme going around about how we’re just replacing the term “wrapper” with “harness”.

Here's what people are missing.

The harness implies a connection between the rider and the horse. It’s not random clothing you put on a horse to make it look nicer. The harness exists because there's someone holding the reins who knows the route and the destination.

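Similarly, building a harness for AI agents implies providing both a route (a way to verify that the agent's changes are correct) and rider knowledge (proprietary data that tells the agent what the goal is):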

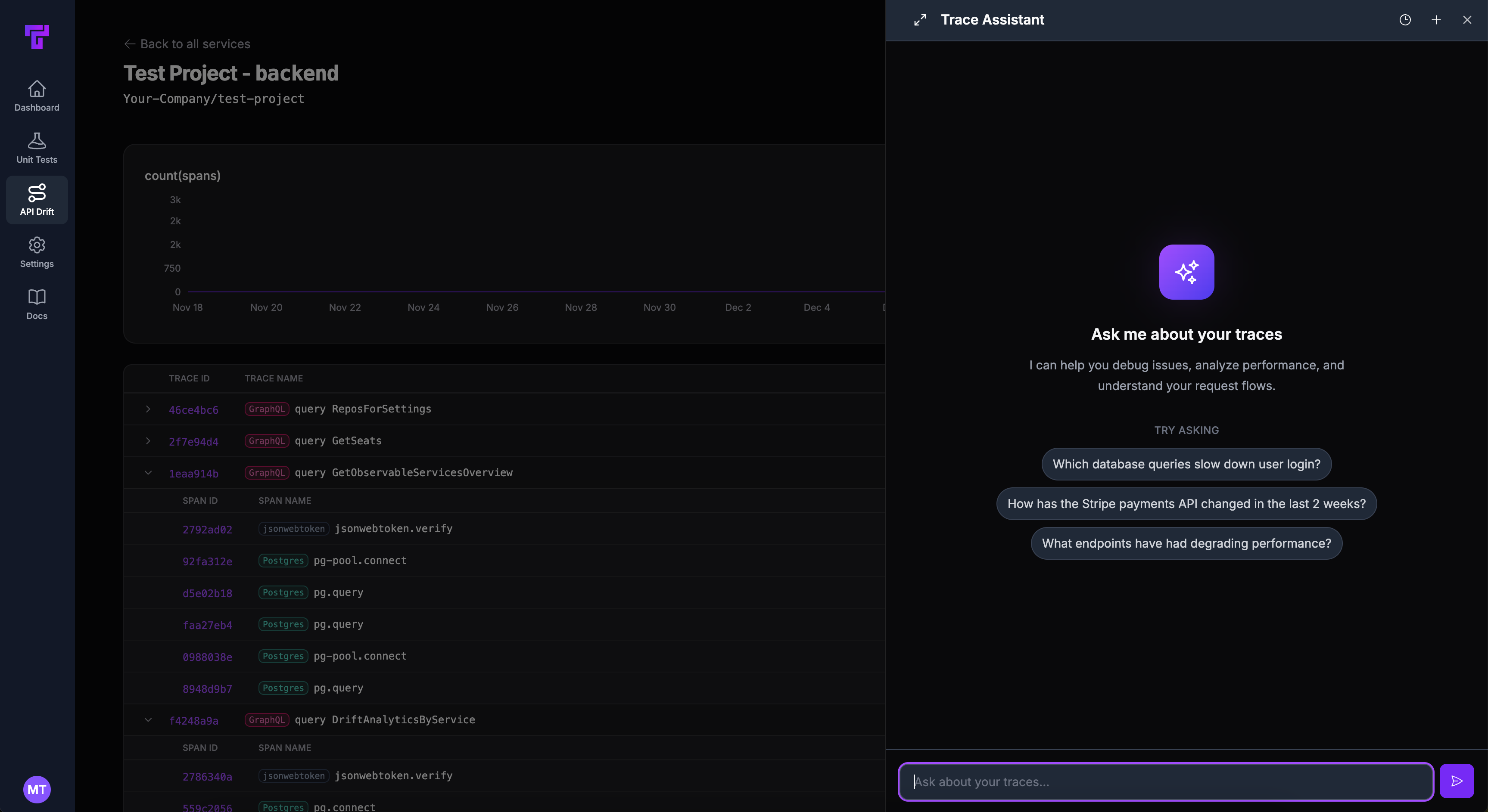

Take the coding example of fixing a bug with Claude Code. The route here would be your ability to verify that code changes are correct. You’d do that by generating and running unit/integration tests. The proverbial horse can then iterate on its path according to test execution output.
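To make the "route" concrete, here is a minimal sketch of a local verify-and-iterate loop. It is only an illustration under stated assumptions: `propose_fix` is a hypothetical callback standing in for the agent, `CheckResult`, `run_tests`, and `iterate` are made-up names, and the test command is whatever your project already uses. None of this is the API of Claude Code or Tusk.

```python
import subprocess
import sys
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class CheckResult:
    passed: bool
    output: str


def run_tests(command: List[str], timeout_s: float = 60.0) -> CheckResult:
    """Run a test command locally and capture its output as feedback."""
    try:
        proc = subprocess.run(
            command, capture_output=True, text=True, timeout=timeout_s
        )
    except subprocess.TimeoutExpired:
        return CheckResult(False, f"test command timed out after {timeout_s}s")
    return CheckResult(proc.returncode == 0, proc.stdout + proc.stderr)


def iterate(
    command: List[str],
    propose_fix: Callable[[str], None],  # hypothetical stand-in for the agent
    max_attempts: int = 3,
) -> bool:
    """Alternate between running tests and letting the agent react to failures."""
    result = run_tests(command)
    for _ in range(max_attempts):
        if result.passed:
            return True
        # Hand the raw test output back so the next change is informed by it.
        propose_fix(result.output)
        result = run_tests(command)
    return result.passed


if __name__ == "__main__":
    # Example: a command that always fails, with a no-op "agent".
    failing = [sys.executable, "-c", "import sys; sys.exit(1)"]
    print(iterate(failing, lambda output: None, max_attempts=2))
```

The point of the design is that every change the agent makes is followed by a test run, so the function never reports success for an unverified change.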

Proprietary data, which serves as “rider knowledge”, is the part of the harness that helps the agent decide what the goal is. For coding agents, this is your observability data that provides context for root cause analysis. Now the agent knows what exactly it needs to fix, just like the horse knows exactly where it needs to go.

If harnesses were just wrappers, you’d take the junk off the horse and it would still take the same path to the same place. That’s the critique of AI wrapper startups; they’re just fancy leather straps.

Supplying both the route and the rider knowledge should be the dominant philosophy of any AI company building harnesses. At Tusk, we’ve invested heavily in the complete flow: capturing observability data, generating tests, executing them, and sending the context back for iteration.

The tech industry spent a decade moving everything to the cloud. CI/CD pipelines, remote testing, serverless functions. Harnesses reverse this.

If your agent works locally, the feedback loops need to be local too. A coding agent can't iterate effectively if it has to wait 20 minutes for CI to report back.

The latency between making a change and knowing whether it works should be measured in seconds, not minutes, which means moving the tests and verification to where the agent runs (hint: the CLI). The cloud pipeline is still important, but it becomes more of a sanity check.
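One way to keep yourself honest about that latency is to time the local check and compare it against a budget. The sketch below is illustrative only: `timed_check` and `Feedback` are hypothetical names, `check` is any callable that returns whether verification passed, and the 10-second default budget is an assumption, not a figure from this post.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Feedback:
    passed: bool
    elapsed_s: float
    within_budget: bool


def timed_check(check: Callable[[], bool], budget_s: float = 10.0) -> Feedback:
    """Run a local verification step and record how long the feedback took."""
    start = time.perf_counter()
    passed = check()
    elapsed = time.perf_counter() - start
    # The budget is an assumed threshold for "fast enough to iterate on".
    return Feedback(passed, elapsed, elapsed <= budget_s)


if __name__ == "__main__":
    fb = timed_check(lambda: True)
    print(f"passed={fb.passed} within_budget={fb.within_budget}")
```

If a check regularly blows its budget, that is a signal to move it out of the agent's inner loop and into the cloud pipeline.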

The human’s role changes in this world. You're reviewing harness output more than you're reviewing the actual code. That means checking whether your unit tests pass and whether your approval tests are catching regressions.

The proverbial horse is now a muscle-bound racehorse. If only we had something to steer it.